Computer vision is a field experiencing significant growth and has numerous practical applications, ranging from self-driving cars to facial recognition systems. However, one of the major challenges in this field is obtaining high-quality datasets to train machine learning models.

To address this challenge, torchvision provides access to pre-built datasets, models and transforms specifically designed for computer vision tasks. Torchvision also supports both CPU and GPU acceleration, making it a flexible and powerful tool for developing computer vision applications.

What are “Torchvision Datasets”?

Torchvision datasets are collections of popular datasets commonly used in computer vision for developing and testing machine learning models. With torchvision datasets, developers can train and test their machine learning models on a range of tasks, such as image classification, object detection, and segmentation.

The datasets are also preprocessed, labeled and organized into formats that can be easily loaded and used.

List of the Torchvision Datasets

- MNIST

- CIFAR-10

- CIFAR-100

- ImageNet

- COCO

- Fashion-MNIST

- SVHN

- STL-10

- CelebA

- Pascal VOC

- Places365

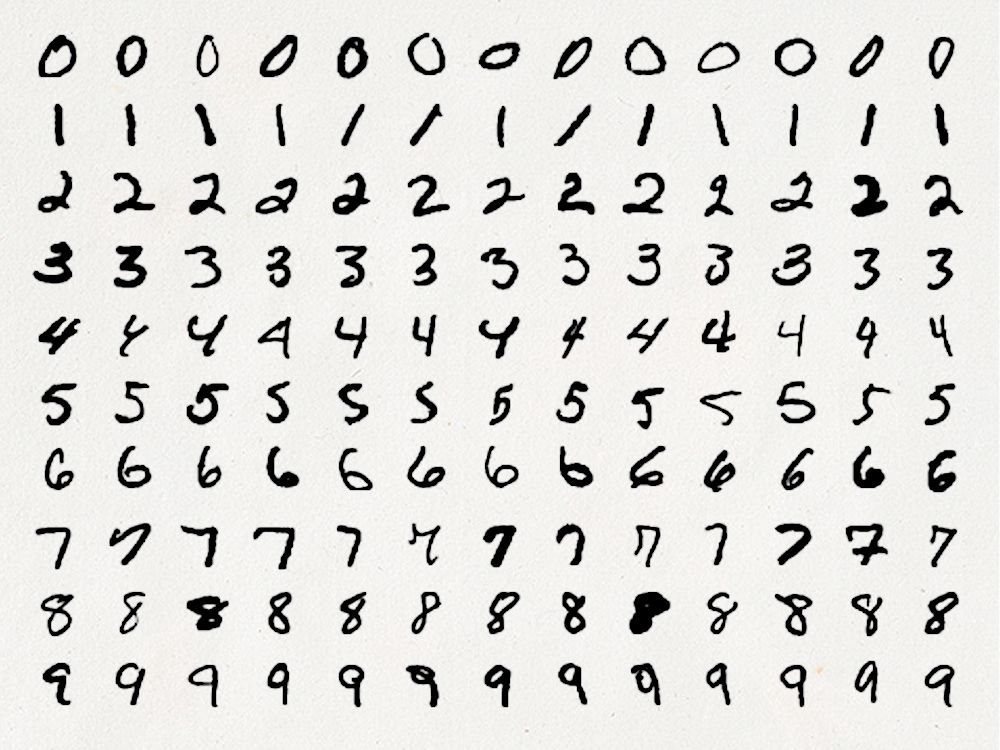

1. MNIST

This torchvision dataset is popular and widely used in the fields of machine learning and computer vision. It consists of 70,000 grayscale images of handwritten digits 0–9, with 60,000 images for training and 10,000 for testing. Each image is 28x28 pixels in size and has a corresponding label denoting which digits it represents.

To access this dataset, you can download it directly from

import torchvision.datasets as datasets

# Load the training dataset

train_dataset = datasets.MNIST(root='data/', train=True, transform=None, download=True)

# Load the testing dataset

test_dataset = datasets.MNIST(root='data/', train=False, transform=None, download=True)

Code for loading MNIST dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.MNIST.html#torchvision.datasets.MNIST on 20/3/2023.

2. CIFAR-10

The CIFAR-10 dataset consists of 60,000 32x32 colour images in 10 classes, with 6,000 images per class. It has a total of 50,000 training images and 10,000 test images which is further divided into five training batches and one test batch, each with 10,000 images.

This dataset can be downloaded from

import torch

import torchvision

import torchvision.transforms as transforms

transform = transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,

download=True, transform=transform)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,

download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,

shuffle=True, num_workers=2)

testloader = torch.utils.data.DataLoader(testset, batch_size=4,

shuffle=False, num_workers=2)

Note that you can adjust the batch size and number of worker processes for the data loaders as needed.

Code for loading CIFAR-10 dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.CIFAR10.html#torchvision.datasets.CIFAR10 on 20/3/2023.

3. CIFAR-100

The CIFAR-100 dataset has 60,000(50,000 training images and 10,000 test images) 32x32 colour images in 100 classes, with 600 images per class. The 100 classes are grouped into 20 super-classes, with a fine label to denote its class and a coarse label to represent the super-class that it belongs to.

To download the torchvision dataset from Kaggle, please visit the Kaggle

import torchvision.datasets as datasets

import torchvision.transforms as transforms

# Define transform to normalize data

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])

])

# Load CIFAR-100 train and test datasets

trainset = datasets.CIFAR100(root='./data', train=True, download=True, transform=transform)

testset = datasets.CIFAR100(root='./data', train=False, download=True, transform=transform)

# Create data loaders for train and test datasets

trainloader = torch.utils.data.DataLoader(trainset, batch_size=64, shuffle=True)

testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=False)

Code for loading CIFAR-100 dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.CIFAR100.html#torchvision.datasets.CIFAR100 on 20/3/2023.

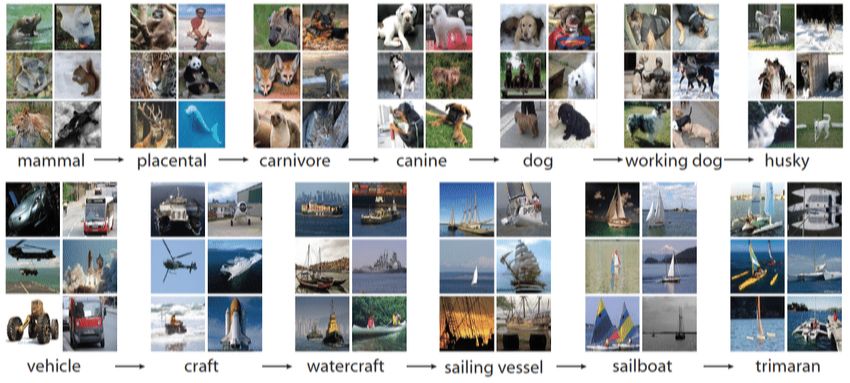

4. ImageNet

The ImageNet dataset in torchvision contains approximately 1.2 million training images, 50,000 validation images and 100,000 test images. Each image in the dataset is labeled with one of the 1,000 categories such as "cat," "dog," "car,", "airplane" etc.

To download this torchvision dataset, you have to visit the

import torchvision.datasets as datasets

import torchvision.transforms as transforms

# Set the path to the ImageNet dataset on your machine

data_path = "/path/to/imagenet"

# Create the ImageNet dataset object with custom options

imagenet_train = datasets.ImageNet(

root=data_path,

split='train',

transform=transforms.Compose([

transforms.Resize(256),

transforms.RandomCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

]),

download=False

)

imagenet_val = datasets.ImageNet(

root=data_path,

split='val',

transform=transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

]),

download=False

)

# Print the number of images in the training and validation sets

print("Number of images in the training set:", len(imagenet_train))

print("Number of images in the validation set:", len(imagenet_val))

Code for loading ImageNet dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.ImageNet.html#torchvision.datasets.ImageNet on 21/3/2023.

5. MS Coco

The Microsoft Common Objects in Context(MS Coco) dataset contains 328,000 high-quality visual images of everyday objects and humans, often used as a standard to compare the performance of algorithms in real-time object detection.

To download this torchvision dataset, please visit the

import torch

from torchvision import datasets, transforms

# Define transformation

transform = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

])

# Load training dataset

train_dataset = datasets.CocoDetection(root='/path/to/dataset/train2017',

annFile='/path/to/dataset/annotations/instances_train2017.json',

transform=transform)

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=32, shuffle=True)

# Load validation dataset

val_dataset = datasets.CocoDetection(root='/path/to/dataset/val2017',

annFile='/path/to/dataset/annotations/instances_val2017.json',

transform=transform)

val_loader = torch.utils.data.DataLoader(val_dataset, batch_size=32, shuffle=False)

Make sure to replace the /path/to/dataset placeholders with the actual path to your dataset directory. Also, adjust the batch_size parameter to fit your needs.

Code for loading MS Coco dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.CocoDetection.html#torchvision.datasets.CocoDetection on 21/3/2023.

6. Fashion-MNIST

The Fashion MNIST dataset was created by Zalando Research as a replacement for the original MNIST dataset. The Fashion MNIST dataset consists of 70,000 grayscale images (training set of 60,000 and a test set of 10,000) of clothing items.

The images are 28x28 pixels in size and represent 10 different classes of clothing items, including T-shirts/tops, trousers, pullovers, dresses, coats, sandals, shirts, sneakers, bags, and ankle boots. It is similar to the original MNIST dataset, but with more challenging classification tasks due to the greater complexity and variety of the clothing items.

This torchvision dataset can be downloaded from

import torch

import torchvision

import torchvision.transforms as transforms

# Define transformations

transform = transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.5,), (0.5,))])

# Load the dataset

trainset = torchvision.datasets.FashionMNIST(root='./data', train=True,

download=True, transform=transform)

testset = torchvision.datasets.FashionMNIST(root='./data', train=False,

download=True, transform=transform)

# Create data loaders

trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,

shuffle=True, num_workers=2)

testloader = torch.utils.data.DataLoader(testset, batch_size=4,

shuffle=False, num_workers=2)

Code for loading Fashion-MNIST dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.FashionMNIST.html#torchvision.datasets.FashionMNIST on 21/3/2023.

7. SVHN

The SVHN (Street View House Numbers) dataset is an image dataset derived from Google’s Street View imagery, which consists of cropped images of house numbers taken from street-level images. It is available in a full format with all the house numbers and their bounding boxes and a cropped format with just the house numbers alone. The full format is often used for object detection tasks, while the cropped format is commonly used for classification tasks.

The SVHN dataset is also included in the torchvision package and it contains 73,257 images for training, 26,032 images for testing and 531,131 additional images for extra training data.

To download this torchvision dataset, you can go to

import torchvision

import torch

# Load the train and test sets

train_set = torchvision.datasets.SVHN(root='./data', split='train', download=True, transform=torchvision.transforms.ToTensor())

test_set = torchvision.datasets.SVHN(root='./data', split='test', download=True, transform=torchvision.transforms.ToTensor())

# Create data loaders

train_loader = torch.utils.data.DataLoader(train_set, batch_size=64, shuffle=True)

test_loader = torch.utils.data.DataLoader(test_set, batch_size=64, shuffle=False)

Code for loading SVHN dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.SVHN.html#torchvision.datasets.SVHN on 22/3/2023.

8. STL-10

The STL-10 dataset is an image recognition dataset that consists of 10 classes, with a total of about 6,000+ images. The STL-10 stands for “Standard Training and Test Set for Image Recognition-10 classes” and the 10 classes in the dataset are:

- Airplane

- Bird

- Car

- Cat

- Deer

- Dog

- Horse

- Monkey

- Ship

- Truck

To access this dataset, you can download it directly from

import torchvision.datasets as datasets

import torchvision.transforms as transforms

# Define the transformation to apply to the data

transform = transforms.Compose([

transforms.ToTensor(),

# Convert PIL image to PyTorch tensor

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)) # Normalize the data

])

# Load the STL-10 dataset

train_dataset = datasets.STL10(root='./data', split='train', download=True, transform=transform)

test_dataset = datasets.STL10(root='./data', split='test', download=True, transform=transform)

Code for loading STL-10 dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.STL10.html#torchvision.datasets.STL10 on 22/3/2023.

9. CelebA

This torchvision dataset is a popular large-scale face attributes dataset which comprises over 200,000 celebrity images. It was first released by researchers at the Chinese University of Hong Kong in 2015. An image in the CelebA consists of 40 facial attributes such as age, hair colour, facial expression and gender. Also, these images were retrieved from the internet and cover a wide range of facial appearances, including different races, ages and genders. Bounding box annotations for the location of the face in each image, as well as 5 landmark points for the eyes, nose and mouth.

You can download this dataset on

import torchvision.datasets as datasets

import torchvision.transforms as transforms

transform = transforms.Compose([

transforms.CenterCrop(178),

transforms.Resize(128),

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

])

celeba_dataset = datasets.CelebA(root='./data', split='train', transform=transform, download=True)

Code for loading CelebA dataset using PyTorch torchvision package. Retrieved fromhttps://pytorch.org/vision/stable/generated/torchvision.datasets.CelebA.html#torchvision.datasets.CelebA on 22/3/2023.

10. PASCAL VOC

The VOC dataset (Visual Object Classes) was first introduced in 2005 as part of the PASCAL VOC Challenge, which aimed at advancing the state of the art in visual recognition. It consists of images of 20 different object categories, including animals, vehicles, and common household objects. Each of these images is annotated with the locations and classifications of objects within the image. The annotations include both bounding boxes and pixel-level segmentation masks.

The dataset is split into two main sets: the training and validation sets. The training set contains approximately 5,000 images with annotations, while the validation set contains around 5,000 images without annotations. In addition, the dataset also includes a test set with approximately 10,000 images, but the annotations for this set are not publicly available.

To access the recent dataset, you can download from the

import torch

import torchvision

from torchvision import transforms

# Define transformations to apply to the images

transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

# Load the train and validation datasets

train_dataset = torchvision.datasets.VOCDetection(root='./data', year='2007', image_set='train', transform=transform)

val_dataset = torchvision.datasets.VOCDetection(root='./data', year='2007', image_set='val', transform=transform)

# Create data loaders

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=32, shuffle=True)

val_loader = torch.utils.data.DataLoader(val_dataset, batch_size=32, shuffle=False)

Code for loading PASCAL VOC dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.VOCDetection.html#torchvision.datasets.VOCDetection on 22/3/2023.

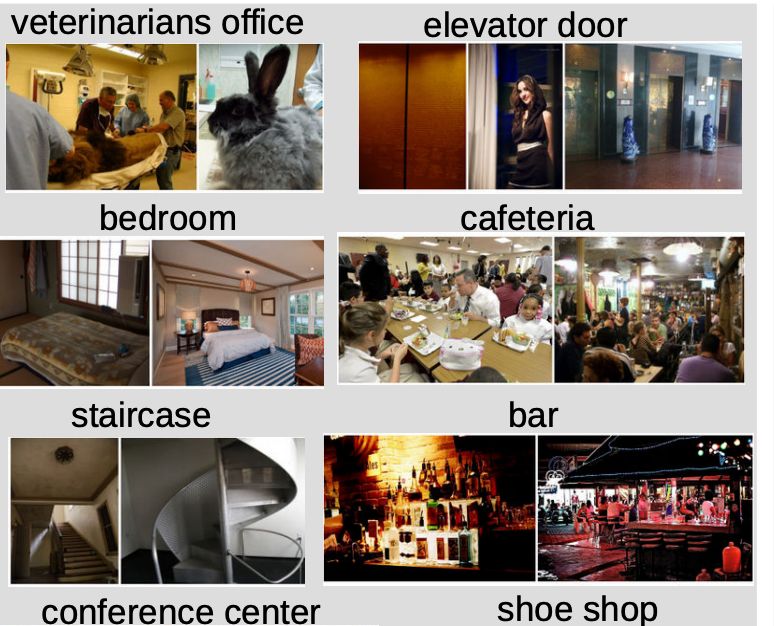

11. Places365

The Places365 dataset is a large-scale scene recognition dataset with more than 1.8 million images covering 365 scene categories. The Places365 Standard dataset consists of around 1.8 million images, while the Places365-Challenge dataset contains 50,000 additional validation images that are more challenging for recognition models.

To access this dataset, you can use

import torch

import torchvision

from torchvision import transforms

# Define transformations to apply to the images

transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

# Load the train and validation datasets

train_dataset = torchvision.datasets.Places365(root='./data', split='train-standard', transform=transform)

val_dataset = torchvision.datasets.Places365(root='./data', split='val', transform=transform)

# Create data loaders

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=32, shuffle=True)

val_loader = torch.utils.data.DataLoader(val_dataset, batch_size=32, shuffle=False)

Code for loading Places365 dataset using PyTorch torchvision package. Retrieved from https://pytorch.org/vision/stable/generated/torchvision.datasets.Places365.html#torchvision.datasets.Places365 on 22/3/2023.

Common Use Cases for Torchvision Datasets

Final Thoughts

Torchvision datasets are often used for training and evaluating machine learning models such as convolutional neural networks (CNNs), which are commonly used in computer vision applications.

They are also available for anyone to download and use freely.

The lead image of this article was generated via HackerNoon's AI Stable Diffusionmodel using the prompt 'thousands of images organized together in small frames'.

More Dataset Listicles: