The two most widely-used open-source machine learning frameworks for training and building deep learning models are TensorFlow and PyTorch.

These frameworks have unique differences in their approach to building and training models. While TensorFlow uses a static graph and declarative programming, which provides better optimization opportunities and allows for distributed training, PyTorch makes use of a dynamic computational graph and imperative programming, which allows for a more flexible and easy debugging process. The choice of frameworks depends on the user's specific needs and preferences.

This article looks at the Best PyTorch Datasets for Building Deep Learning Models available today.

Ultimate List of Open PyTorch Datasets

1. Penn Treebank

The Penn Treebank is a collection of annotated English text, which is extensively studied in the natural language processing (NLP) research community. It comprises over 4.5 million words of text from various genres such as magazines, news articles and fictional stories. The dataset also consists of manually annotated information about name entitles, part of speech tags and syntactic structure, used to train and evaluate a wide range of NLP models including language models, parsers and machine translation systems.

To download this dataset, click

2. Stanford Question Answering Dataset (SQuAD)

The SQuAD (Stanford Question Answering Dataset) is a popular benchmark dataset in natural language processing (NLP) that comprises more than 100,000 question-answer pairs, extracted from a set of Wikipedia articles. It is used to evaluate the performance of various NLP models built using PyTorch or other deep learning frameworks. The answer spans have an average length of 3.6 words, and there are 11.0 words in the corresponding passages on average.

Here are some extra details about the SQuAD:

The goal of the dataset is to provide a challenging task for machine learning models to answer questions about a given text passage. Click here to download the

3. Lung Image Database Consortium and Image Database Resource Initiative (LIDC-IDRI)

This PyTorch dataset is a public dataset of computed tomography images of the chest that has been annotated for lung nodules by multiple radiologists. It comprises 1,018 CT scans collected from various institutions and contains over 23,000 annotated nodules.

Each scan in the dataset is accompanied by annotations from four experienced radiologists which provide information on shape, size, nodule location and texture. The dataset was created to support research into the development of computer-aided diagnosis (CAD) systems for lung cancer screening and diagnosis.

Note: The dataset is publicly available but subject to certain restrictions and requirements for usage.

Click

4. Fashion-MNIST

The PyTorch Fashion MNIST dataset was created by Zalando Research as a replacement for the original MNIST dataset and is available in a PyTorch-compatible format. The PyTorch Fashion MNIST dataset comprises 70,000 grayscale images of clothing items, including 60,000 training images and 10,000 test images.

The images are 28x28 pixels in size and represent 10 different classes of clothing items, including T-shirts/tops, trousers, pullovers, dresses, coats, sandals, shirts, sneakers, bags, and ankle boots. It is similar to the original MNIST dataset, but with more challenging classification tasks due to the greater complexity and variety of the clothing items.

The dataset can be downloaded

5. Yelp Reviews

The Yelp Reviews dataset is an extensive collection of over 5 million reviews of local businesses from 11 metropolitan areas in the United States. Each review in the dataset contains information such as the star rating, business category, review text, date, and location. It is a valuable resource interested in building deep learning models with PyTorch.

Please sign up and click here to download thedataset.

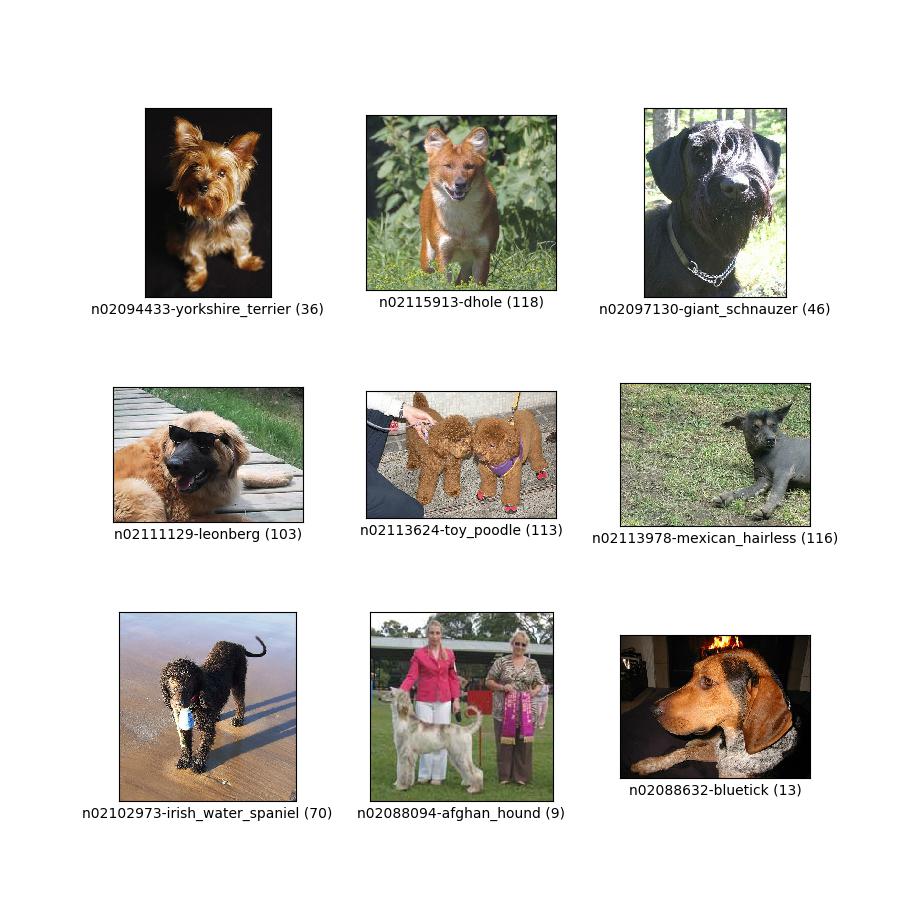

6. Stanford Dogs

This PyTorch Dataset comprises a collection of 20,580 high-quality images of 120 different breeds of dogs, each labelled with information about the breed of the dog in the image. It can be used for image classification and object recognition. With the dataset provided in JPEG format and annotations in a text file, the images are of size 224x224 pixels. The breeds in the dataset range from common breeds such as Golden Retrievers and Labradors to more obscure breeds such as the Otterhound and the Sussex Spaniel.

You can download the

7. Caltech 101

While the limited number of images per category can be a challenge, the detailed annotations make the PyTorch Caltech 101 dataset a valuable resource for evaluating deep learning models. The dataset is a labelled computer vision dataset with 9,144 high-quality images of objects across 101 categories. It also covers a wide range of object categories and the images were obtained from various sources. Each image is labelled with the category of object and image, which makes it simpler to use with a variety of deep learning frameworks.

Click here to

8. STS-B (Semantic Textual Similarity Benchmark)

The STS-B (Semantic Textual Similarity Benchmark) dataset is an English dataset used in the STS tasks organized in the context of SemEval between 2012 and 2017. It comprises 8628 sentence pairs with human-assigned similarity scores on a scale of 1 to 5. Drawn from various sources, like news articles, forum posts, images with captions and covering a wide range of topics, it is a popular dataset used with PyTorch for evaluating models’ performance in determining the semantic similarity between two sentences. The STS-B dataset is available in multiple formats, including PyTorch-compatible formats, as PyTorch is a deep-learning framework for training and evaluating models on this dataset.

\To download this dataset, click

9. WMT'14 English-German

This PyTorch dataset is a benchmark dataset for machine translation between English and German created by Stanford in 2015. It comprises parallel corpora of sentence-aligned texts in both English and German, which are used to build and evaluate deep learning models. While the test sets contain 3,000 sentence pairs each, the training set consists of approximately 4.5 million sentence pairs and the average sentence length is 26 words in English and 30 words in German, with a vocabulary size of about 160,000 words for English and 220,000 words for German.

You can scroll down and download it

10. CelebA

This dataset is a popular large-scale face attributes dataset which comprises over 200,000 celebrity images. It was first released by researchers at the Chinese University of Hong Kong in 2015. An image in the CelebA consists of 40 facial attributes such as age, hair colour, facial expression and gender. Also, these images were retrieved from the internet and cover a wide range of facial appearances, including different races, ages and genders. Bounding box annotations for the location of the face in each image, as well as 5 landmark points for the eyes, nose and mouth.

Note: The CelebA dataset is under the license of the Creative Commons Attribution-Noncommercial-Share, which permits it to be used for non-commercial research purposes as long as proper credit is given.

To use the CelebA dataset in PyTorch, you can use the torchvision.datasets.CelebA class, which is part of the torchvision module. You can download the dataset from the

11. UCF101

The UFC101 dataset is widely used for video classification in the field of computer vision. It comprises 13,230 videos of human actions from 101 action categories, each containing around 100 to 300 videos. The PyTorch UCF101 dataset is a preprocessed version of the original UCF101 that is ready to use in PyTorch. The preprocessed dataset comprises video frames that have been normalized and resized, including corresponding labels for each video. It’s also split into three sets: training, validation and testing, with approximately 9,500, 3,500 and 3,000 videos, respectively.

To download the dataset, click

12. HMDB51

The HMDB51 dataset is a collection of videos retrieved from collected from various sources, including movies, TV shows and online videos, comprising 51 action classes, each with at least 101 video clips. It was created by researchers at the University of Central Florida in 2011 for research in human action recognition. The videos are in AVI format and have a resolution of 320x240 pixels, with ground-truth annotations for each video, including the action class label and the start and end frames of the action within the video. Each video in the dataset represents a person performing an action in front of a static camera. The actions include a wide range of activities every day, such as jumping, waving, drinking, and brushing teeth, as well as complex actions like playing guitar and horseback riding.

Note: It is used in concomitance with the

You can download the dataset

13. ActivityNet

The ActivityNet is a large-scale video understanding dataset that comprises over 20,000 videos from a diverse set of categories like cooking, sports, dancing etc. The videos have an average length of 3 minutes and are annotated with an average of 1.41 activity segments. It is available in PyTorch, which is easy to use in deep learning frameworks. The PyTorch version offers preprocessed features extracted from the RGB frames and optical flow fields of each video, as well as ground truth annotations for the temporal segments and labels of activity.

You can download the dataset

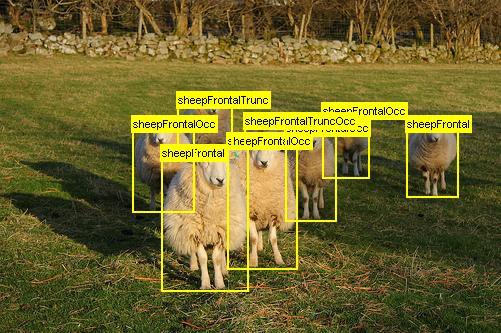

14. VOC dataset (Visual Object Classes)

The VOC dataset (Visual Object Classes) was first introduced in 2005 as part of the PASCAL VOC Challenge, which aimed at advancing the state of the art in visual recognition. It consists of images of 20 different object categories, including animals, vehicles, and common household objects. Each of these images is annotated with the locations and classifications of objects within the image. The annotations include both bounding boxes and pixel-level segmentation masks. The dataset is split into two main sets: the training and validation sets. The training set contains approximately 5,000 images with annotations, while the validation set contains around 5,000 images without annotations. In addition, the dataset also includes a test set with approximately 10,000 images, but the annotations for this set are not publicly available.

To download the recent dataset, you can download it from the

15. YCB-Video

This dataset is a collection of 3D object models and video sequences designed for object recognition and pose estimation tasks. It contains 21 everyday household items, with each object captured in various lighting conditions and camera viewpoints. The dataset provides pixel-level ground truth annotations and is commonly used for evaluating computer vision algorithms and robotic systems.

Click here to download the

16. KITTI

The KITTI dataset is a collection of computer vision data for autonomous driving research. It includes over 4000 high-resolution images, LIDAR point clouds, and sensor data from a car equipped with various sensors. The dataset provides annotations for object detection, tracking, and segmentation, as well as depth maps and calibration parameters. The KITTI dataset is widely used for training and evaluating deep learning models for autonomous driving and robotics.

To download the recent dataset, you can download it from the

17. BraTS

The BRATS PyTorch dataset is a collection of magnetic resonance imaging (MRI) scans for brain tumour segmentation. It consists of over 200 high-resolution 3D brain images, each with four modalities (T1, T1c, T2, and FLAIR) and corresponding binary segmentation masks. The dataset is commonly used for training and evaluating deep learning models for automated brain tumour detection and segmentation.

You can download this dataset on Kaggle by clicking

18. Multi-Human Parsing

The Multi-Human Parsing PyTorch dataset is a large-scale human image dataset with pixel-level annotations for human part parsing. It contains over 26,000 images of humans, each segmented into 18 human part labels. The dataset is used for training and evaluating deep learning models for human pose estimation, segmentation and action recognition.

To download the dataset, click

19. Charades

This dataset is a large-scale video dataset for action recognition and localization. It comprises over 9,800 videos of daily activities, such as cooking, cleaning, and socializing, with an average length of 30 seconds per video. The dataset provides detailed annotations for each video, including temporal boundaries for actions and atomic visual concepts, making it suitable for training and evaluating deep learning models for action recognition, detection and segmentation.

The Charades PyTorch Dataset is widely used in the computer vision research community and is freely available for

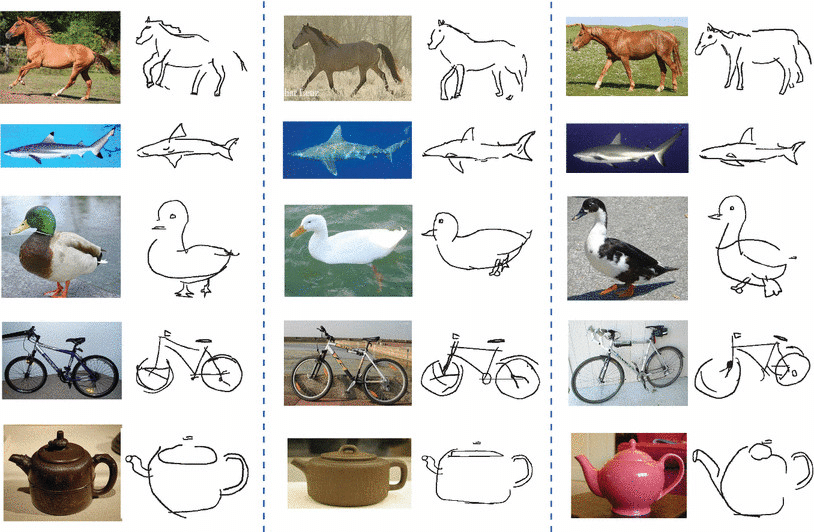

20. TU Berlin

This dataset is a rich collection of high-resolution images and 3D object poses for object detection and pose estimation. It contains over 11,000 images of 60 object categories, with annotations for 2D and 3D poses. With its large size and diverse object categories, the TU Berlin PyTorch dataset provides an excellent testbed for developing robust and accurate object detection and pose estimation models.

You can get the dataset directly from the website by clicking

Common Use Cases for PyTorch Datasets

Natural Language Processing

- Penn Treebank

- Stanford Question Answering Dataset (SQuAD)

- STS-B (Semantic Textual Similarity Benchmark)

- WMT'14 English-German

- Yelp Reviews

Computer Vision

- CelebA

- Caltech 101

- Stanford Dogs

- YCB-Video

- VOC dataset (Visual Object Classes)

- KITTI

- Multi-Human Parsing

- Charades

- TU Berlin

- Fashion-MNIST

Medical Image Analysis

Human Activity Recognition

Final Thoughts

PyTorch is useful for research and experimentation, where the focus is often on developing deep learning models and exploring new approaches. Additionally, PyTorch has gained a reputation as a research-focused framework with a growing community of developers and researchers contributing to the ecosystem.

These datasets have applications in multiple fields and are also available for anyone to download and use freely.

The lead image of this article was generated via HackerNoon's AI Stable Diffusion model using the prompt 'PyTorch superimposed images'.